Content

ADO.NET SQL Server

In This Section

See also

SQL Server Security

In This Section

Related Sections

See also

Overview of SQL Server Security

In This Section

See also

Authentication in SQL Server

Authentication Scenarios

Login Types

Mixed Mode Authentication

External Resources

See also

Server and Database Roles in SQL Server

Fixed Server Roles

Fixed Database Roles

Database Roles and Users

The public Role

The dbo User Account

The guest User Account

See also

Ownership and User-Schema Separation in SQL Server

User-Schema Separation

Schema Owners and Permissions

Built-In Schemas

The dbo Schema

External Resources

See also

Authorization and Permissions in SQL Server

The Principle of Least Privilege

Role-Based Permissions

Permissions Through Procedural Code

Permission Statements

Ownership Chains

Procedural Code and Ownership Chaining

External Resources

See also

Data Encryption in SQL Server

Keys and Algorithms

External Resources

See also

CLR Integration Security in SQL Server

External Resources

See also

Application Security Scenarios in SQL Server

Common Threats

SQL Injection

Elevation of Privilege

Probing and Intelligent Observation

Authentication

Passwords

In This Section

See also

Managing Permissions with Stored Procedures in SQL Server

Stored Procedure Benefits

Stored Procedure Execution

Best Practices

External Resources

See also

Writing Secure Dynamic SQL in SQL Server

Anatomy of a SQL Injection Attack

Dynamic SQL Strategies

EXECUTE AS

Certificate Signing

Cross Database Access

External Resources

See also

Signing Stored Procedures in SQL Server

Creating Certificates

External Resources

See also

Customizing Permissions with Impersonation in SQL Server

Context Switching with the EXECUTE AS Statement

Granting Permissions with the EXECUTE AS Clause

Using EXECUTE AS with REVERT

Specifying the Execution Context

See also

Granting Row-Level Permissions in SQL Server

Implementing Row-level Filtering

See also

Creating Application Roles in SQL Server

Application Role Features

The Principle of Least Privilege

Application Role Enhancements

Application Role Alternatives

External Resources

See also

Enabling Cross-Database Access in SQL Server

Off By Default

Enabling Cross-database Ownership Chaining

Dynamic SQL

External Resources

See also

SQL Server Express Security

Network Access

User Instances

External Resources

See also

SQL Server Data Types and ADO.NET

In This Section

Reference

See also

SqlTypes and the DataSet

Example

See also

Handling Null Values

Nulls and Three-Valued Logic

Nulls and SqlBoolean

Understanding the ANSI_NULLS Option

Assigning Null Values

Multiple Column (Row) Assignment

Assigning Null Values

Example

Comparing Null Values with SqlTypes and CLR Types

See also

Comparing GUID and uniqueidentifier Values

Working with SqlGuid Values

Comparing GUID Values

See also

Date and Time Data

Date/Time Data Types Introduced in SQL Server 2008

Date Format and Date Order

Date/Time Data Types and Parameters

SqlParameter Properties

Creating Parameters

Date Example

Time Example

Datetime2 Example

DateTimeOffSet Example

AddWithValue

Retrieving Date and Time Data

Specifying Date and Time Values as Literals

Resources in SQL Server 2008 Books Online

See also

Large UDTs

Retrieving UDT Schemas Using GetSchema

GetSchemaTable Column Values for UDTs

SqlDataReader Considerations

Specifying SqlParameters

Retrieving Data Example

See also

XML Data in SQL Server

In This Section

See also

SQL XML Column Values

Example

See also

Specifying XML Values as Parameters

Example

See also

SQL Server Binary and Large-Value Data

In This Section

See also

Modifying Large-Value (max) Data in ADO.NET

Large-Value Type Restrictions

Working with Large-Value Types in Transact-SQL

Updating Data Using UPDATE .WRITE

Example

Working with Large-Value Types in ADO.NET

Using GetSqlBytes to Retrieve Data

Using GetSqlChars to Retrieve Data

Using GetSqlBinary to Retrieve Data

Using GetBytes to Retrieve Data

Using GetValue to Retrieve Data

Converting from Large Value Types to CLR Types

Example

Using Large Value Type Parameters

Example

See also

FILESTREAM Data

SqlClient Support for FILESTREAM

Creating the SQL Server Table

Example: Reading, Overwriting, and Inserting FILESTREAM Data

Resources in SQL Server Books Online

See also

Inserting an Image from a File

Example

See also

SQL Server Data Operations in ADO.NET

In This Section

See also

Bulk Copy Operations in SQL Server

In This Section

See also

Bulk Copy Example Setup

Table Setup

See also

Single Bulk Copy Operations

Example

Performing a Bulk Copy Operation Using Transact-SQL and the Command Class

See also

Multiple Bulk Copy Operations

See also

Transaction and Bulk Copy Operations

Performing a Non-transacted Bulk Copy Operation

Performing a Dedicated Bulk Copy Operation in a Transaction

Using Existing Transactions

See also

Multiple Active Result Sets (MARS)

In This Section

Related Sections

See also

Enabling Multiple Active Result Sets

Enabling and Disabling MARS in the Connection String

Special Considerations When Using MARS

Statement Interleaving

MARS Session Cache

Thread Safety

Connection Pooling

SQL Server Batch Execution Environment

Parallel Execution

Detecting MARS Support

See also

Using Multiple Commands with MARS

Example

Reading and Updating Data with MARS

Example

See also

Asynchronous Operations

In This Section

See also

Windows Applications Using Callbacks

Example

See also

ASP.NET Applications Using Wait Handles

Example: Wait (Any) Model

Example: Wait (All) Model

See also

Polling in Console Applications

Example

See also

Table-Valued Parameters

Passing Multiple Rows in Previous Versions of SQL Server

Creating Table-Valued Parameter Types

Modifying Data with Table-Valued Parameters (Transact-SQL)

Limitations of Table-Valued Parameters

Configuring a SqlParameter Example

Passing a Table-Valued Parameter to a Stored Procedure

Passing a Table-Valued Parameter to a Parameterized SQL Statement

Streaming Rows with a DataReader

See also

SQL Server Features and ADO.NET

In This Section

See also

Enumerating Instances of SQL Server (ADO.NET)

Retrieving an Enumerator Instance

Enumeration Limitations

Example

See also

Provider Statistics for SQL Server

Statistical Values Available

Retrieving a Value

Retrieving All Values

See also

SQL Server Express User Instances

User Instance Capabilities

Enabling User Instances

Connecting to a User Instance

Using the |DataDirectory| Substitution String

Lifetime of a User Instance Connection

How User Instances Work

User Instance Scenarios

See also

Database Mirroring in SQL Server

Specifying the Failover Partner in the Connection String

Retrieving the Current Server Name

SqlClient Mirroring Behavior

Database Mirroring Resources

See also

SQL Server Common Language Runtime Integration

In This Section

See also

Introduction to SQL Server CLR Integration

Enabling CLR Integration

Deploying a CLR Assembly

CLR Integration Security

Debugging a CLR Assembly

See also

CLR User-Defined Functions

See also

CLR User-Defined Types

See also

CLR Stored Procedures

See also

CLR Triggers

See also

The Context Connection

See also

SQL Server In-Process-Specific Behavior of ADO.NET

See also

Query Notifications in SQL Server

In This Section

Reference

See also

Enabling Query Notifications

Query Notifications Requirements

Enabling Query Notifications to Run Sample Code

Query Notifications Permissions

Choosing a Notification Object

Using SqlDependency

Using SqlNotificationRequest

See also

SqlDependency in an ASP.NET Application

About the Sample Application

Creating the Sample Application

Testing the Application

See also

Detecting Changes with SqlDependency

Security Considerations

Example

See also

SqlCommand Execution with a SqlNotificationRequest

Creating the Notification Request

Example

See also

Snapshot Isolation in SQL Server

Understanding Snapshot Isolation and Row Versioning

Managing Concurrency with Isolation Levels

Snapshot Isolation Level Extensions

How Snapshot Isolation and Row Versioning Work

Working with Snapshot Isolation in ADO.NET

Example

Example

Using Lock Hints with Snapshot Isolation

See also

SqlClient Support for High Availability, Disaster Recovery

Connecting With MultiSubnetFailover

Upgrading to Use Multi-Subnet Clusters from Database Mirroring

Specifying Application Intent

Read-Only Routing

See also

SqlClient Support for LocalDB

Remarks

Programmatically Create a Named Instance

See also

LINQ to SQL

In This Section

Related Sections

Getting Started

Next Steps

See also

What You Can Do With LINQ to SQL

Selecting

Inserting

Updating

Deleting

See also

Typical Steps for Using LINQ to SQL

Creating the Object Model

1. Select a tool to create the model.

2. Select the kind of code you want to generate.

3. Refine the code file to reflect the needs of your application.

Using the Object Model

1. Create queries to retrieve information from the database.

2. Override default behaviors for Insert, Update, and Delete.

3. Set appropriate options to detect and report concurrency conflicts.

4. Establish an inheritance hierarchy.

5. Provide an appropriate user interface.

6. Debug and test your application.

See also

Get the sample databases for ADO.NET code samples

Get the Northwind sample database for SQL Server

Get the Northwind sample database for Microsoft Access

Get the AdventureWorks sample database for SQL Server

Get SQL Server Management Studio

Get SQL Server Express

See also

Learning by Walkthroughs

Getting Started Walkthroughs

General

Troubleshooting

Log-On Issues

To verify or change the database log on

Protocols

To enable the Named Pipes protocol

Stopping and Restarting the Service

To stop and restart the service

See also

Walkthrough: Simple Object Model and Query (Visual Basic)

Prerequisites

Overview

Creating a LINQ to SQL Solution

To create a LINQ to SQL solution

Adding LINQ References and Directives

To add System.Data.Linq

Mapping a Class to a Database Table

To create an entity class and map it to a database table

Designating Properties on the Class to Represent Database Columns

To represent characteristics of two database columns

Specifying the Connection to the Northwind Database

To specify the database connection

Creating a Simple Query

To create a simple query

Executing the Query

To execute the query

Next Steps

See also

Walkthrough: Querying Across Relationships (Visual Basic)

Prerequisites

Overview

Mapping Relationships across Tables

To add the Order entity class

Annotating the Customer Class

To annotate the Customer class

Creating and Running a Query across the Customer-Order Relationship

To access Order objects by using Customer objects

Creating a Strongly Typed View of Your Database

To strongly type the DataContext object

Next Steps

See also

Walkthrough: Manipulating Data (Visual Basic)

Prerequisites

Overview

Creating a LINQ to SQL Solution

To create a LINQ to SQL solution

Adding LINQ References and Directives

To add System.Data.Linq

Adding the Northwind Code File to the Project

To add the northwind code file to the project

Setting Up the Database Connection

To set up and test the database connection

Creating a New Entity

To add a new Customer entity object

Updating an Entity

To change the name of a Customer

Deleting an Entity

To delete a row

Submitting Changes to the Database

To submit changes to the database

See also

Walkthrough: Using Only Stored Procedures (Visual Basic)

Prerequisites

Overview

Creating a LINQ to SQL Solution

To create a LINQ to SQL solution

Adding the LINQ to SQL Assembly Reference

To add System.Data.Linq.dll

Adding the Northwind Code File to the Project

To add the northwind code file to the project

Creating a Database Connection

To create the database connection

Setting up the User Interface

To set up the user interface

To handle button clicks

Testing the Application

To test the application

Next Steps

See also

Walkthrough: Simple Object Model and Query (C#)

Prerequisites

Overview

Creating a LINQ to SQL Solution

To create a LINQ to SQL solution

Adding LINQ References and Directives

To add System.Data.Linq

Mapping a Class to a Database Table

To create an entity class and map it to a database table

Designating Properties on the Class to Represent Database Columns

To represent characteristics of two database columns

Specifying the Connection to the Northwind Database

To specify the database connection

Creating a Simple Query

To create a simple query

Executing the Query

To execute the query

Next Steps

See also

Walkthrough: Querying Across Relationships (C#)

Prerequisites

Overview

Mapping Relationships Across Tables

To add the Order entity class

Annotating the Customer Class

To annotate the Customer class

Creating and Running a Query Across the Customer-Order Relationship

To access Order objects by using Customer objects

Creating a Strongly Typed View of Your Database

To strongly type the DataContext object

Next Steps

See also

Walkthrough: Manipulating Data (C#)

Prerequisites

Overview

Creating a LINQ to SQL Solution

To create a LINQ to SQL solution

Adding LINQ References and Directives

To add System.Data.Linq

Adding the Northwind Code File to the Project

To add the northwind code file to the project

Setting Up the Database Connection

To set up and test the database connection

Creating a New Entity

To add a new Customer entity object

Updating an Entity

To change the name of a Customer

Deleting an Entity

To delete a row

Submitting Changes to the Database

To submit changes to the database

See also

Walkthrough: Using Only Stored Procedures (C#)

Prerequisites

Overview

Creating a LINQ to SQL Solution

To create a LINQ to SQL solution

Adding the LINQ to SQL Assembly Reference

To add System.Data.Linq.dll

Adding the Northwind Code File to the Project

To add the northwind code file to the project

Creating a Database Connection

To create the database connection

Setting up the User Interface

To set up the user interface

To handle button clicks

Testing the Application

To test the application

Next Steps

See also

Programming Guide

In This Section

Related Sections

Creating the Object Model

In This Section

Related Sections

How to: Generate the Object Model in Visual Basic or C#

Example

Example

See also

How to: Generate the Object Model as an External File

Example

Example

See also

How to: Generate Customized Code by Modifying a DBML File

Example

Example

See also

How to: Validate DBML and External Mapping Files

To validate a .dbml or XML file

Alternate Method for Supplying Schema Definition

To copy a schema definition file from a Help topic

See also

How to: Make Entities Serializable

Example

See also

How to: Customize Entity Classes by Using the Code Editor

See also

How to: Specify Database Names

To specify the name of the database

See also

How to: Represent Tables as Classes

To map a class to a database table

Example

See also

How to: Represent Columns as Class Members

To map a field or property to a database column

Example

See also

How to: Represent Primary Keys

To designate a property or field as a primary key

See also

How to: Map Database Relationships

Example

Example

See also

How to: Represent Columns as Database-Generated

To designate a field or property as representing a database-generated column

See also

How to: Represent Columns as Timestamp or Version Columns

To designate a field or property as representing a timestamp or version column

See also

How to: Specify Database Data Types

To specify text to define a data type in a T-SQL table

See also

How to: Represent Computed Columns

To represent a computed column

See also

How to: Specify Private Storage Fields

To specify the name of an underlying storage field

See also

How to: Represent Columns as Allowing Null Values

To designate a column as allowing null values

See also

How to: Map Inheritance Hierarchies

To map an inheritance hierarchy

Example

See also

How to: Specify Concurrency-Conflict Checking

Example

See also

Communicating with the Database

In This Section

See also

Communicating with the Database

In This Section

See also

How to: Connect to a Database

Example

Example

See also

How to: Directly Execute SQL Commands

Example

See also

How to: Reuse a Connection Between an ADO.NET Command and a DataContext

Example

See also

Querying the Database

In This Section

How to: Query for Information

Example

See also

How to: Retrieve Information As Read-Only

Example

See also

How to: Control How Much Related Data Is Retrieved

Example

See also

How to: Filter Related Data

Example

See also

How to: Turn Off Deferred Loading

Example

See also

How to: Directly Execute SQL Queries

Example

Example

See also

How to: Store and Reuse Queries

Example

Example

See also

How to: Handle Composite Keys in Queries

Example

Example

See also

How to: Retrieve Many Objects At Once

Example

See also

How to: Filter at the DataContext Level

Example

See also

Query Examples

In This Section

Related Sections

Aggregate Queries

In This Section

Related Sections

Return the Average Value From a Numeric Sequence

Example

Example

Example

See also

Count the Number of Elements in a Sequence

Example

Example

See also

Find the Maximum Value in a Numeric Sequence

Example

Example

Example

See also

Find the Minimum Value in a Numeric Sequence

Example

Example

Example

See also

Compute the Sum of Values in a Numeric Sequence

Example

Example

See also

Return the First Element in a Sequence

Example

Example

See also

Return Or Skip Elements in a Sequence

Example

Example

Example

See also

Sort Elements in a Sequence

Example

Example

Example

Example

Example

Example

See also

Group Elements in a Sequence

Example

Example

Example

Example

Example

Example

Example

Example

Example

See also

Eliminate Duplicate Elements from a Sequence

Example

See also

Determine if Any or All Elements in a Sequence Satisfy a Condition

Example

Example

Example

See also

Concatenate Two Sequences

Example

Example

See also

Return the Set Difference Between Two Sequences

Example

See also

Return the Set Intersection of Two Sequences

Example

See also

Return the Set Union of Two Sequences

Example

See also

Convert a Sequence to an Array

Example

See also

Convert a Sequence to a Generic List

Example

See also

Convert a Type to a Generic IEnumerable

Example

See also

Formulate Joins and Cross-Product Queries

Example

Example

Example

Example

Example

Example

Example

Example

Example

Example

Example

See also

Formulate Projections

Example

Example

Example

Example

Example

Example

Example

Example

Example

See also

How to: Insert Rows Into the Database

To insert a row into the database

Example

See also

How to: Update Rows in the Database

To update a row in the database

Example

See also

How to: Delete Rows From the Database

To delete a row in the database

Example

Example

See also

How to: Submit Changes to the Database

Example

See also

How to: Bracket Data Submissions by Using Transactions

Example

See also

How to: Dynamically Create a Database

Example

Example

Example

See also

How to: Manage Change Conflicts

In This Section

Related Sections

How to: Detect and Resolve Conflicting Submissions

Example

See also

How to: Specify When Concurrency Exceptions are Thrown

Example

See also

How to: Specify Which Members are Tested for Concurrency Conflicts

To always use this member for detecting conflicts

To never use this member for detecting conflicts

To use this member for detecting conflicts only when the application has changed the value of the member

Example

See also

How to: Retrieve Entity Conflict Information

Example

See also

How to: Retrieve Member Conflict Information

Example

See also

How to: Resolve Conflicts by Retaining Database Values

Example

See also

How to: Resolve Conflicts by Overwriting Database Values

Example

See also

How to: Resolve Conflicts by Merging with Database Values

Example

See also

Debugging Support

In This Section

See also

How to: Display Generated SQL

Example

See also

How to: Display a ChangeSet

Example

See also

How to: Display LINQ to SQL Commands

Example

See also

Troubleshooting

Unsupported Standard Query Operators

Memory Issues

File Names and SQLMetal

Class Library Projects

Cascade Delete

Expression Not Queryable

DuplicateKeyException

String Concatenation Exceptions

Skip and Take Exceptions in SQL Server 2000

GroupBy InvalidOperationException

OnCreated() Partial Method

See also

Background Information

In This Section

Related Sections

ADO.NET and LINQ to SQL

Connections

Transactions

Direct SQL Commands

Parameters

See also

Analyzing LINQ to SQL Source Code

See also

Customizing Insert, Update, and Delete Operations

In This Section

Customizing Operations: Overview

Loading Options

Partial Methods

Stored Procedures and User-Defined Functions

See also

Insert, Update, and Delete Operations

See also

Responsibilities of the Developer In Overriding Default Behavior

See also

Adding Business Logic By Using Partial Methods

Example

Description

Code

Example

Description

Code

See also

Data Binding

Underlying Principle

Operation

IListSource Implementation

Specialized Collections

Generic SortableBindingList

Generic DataBindingList

Binding to EntitySets

Adding a Sorting Feature

Caching

Cancellation

Troubleshooting

See also

Inheritance Support

See also

Local Method Calls

Example 1

See also

N-Tier and Remote Applications with LINQ to SQL

Additional Resources

See also

LINQ to SQL N-Tier with ASP.NET

See also

LINQ to SQL N-Tier with Web Services

Setting up LINQ to SQL on the Middle Tier

Defining the Serializable Types

Retrieving and Inserting Data

Tracking Changes for Updates and Deletes

See also

Implementing Business Logic (LINQ to SQL)

How LINQ to SQL Invokes Your Business Logic

A Closer Look at the Extensibility Points

See also

Data Retrieval and CUD Operations in N-Tier Applications (LINQ to SQL)

Retrieving Data

Client Method Call

Middle Tier Implementation

Inserting Data

Middle Tier Implementation

Deleting Data

Updating Data

Optimistic concurrency with timestamps

With Subset of Original Values

With Complete Entities

Expected Entity Members

State

See also

Object Identity

Examples

Object Caching Example 1

Object Caching Example 2

See also

The LINQ to SQL Object Model

LINQ to SQL Entity Classes and Database Tables

Example

LINQ to SQL Class Members and Database Columns

Example

LINQ to SQL Associations and Database Foreign-key Relationships

Example

LINQ to SQL Methods and Database Stored Procedures

Example

See also

Object States and Change-Tracking

Object States

Inserting Objects

Deleting Objects

Updating Objects

See also

Optimistic Concurrency: Overview

Example

Conflict Detection and Resolution Checklist

LINQ to SQL Types That Support Conflict Discovery and Resolution

See also

Query Concepts

In This Section

Related Sections

LINQ to SQL Queries

See also

Querying Across Relationships

See also

Remote vs. Local Execution

Remote Execution

Local Execution

Comparison

Queries Against Unordered Sets

See also

Deferred versus Immediate Loading

See also

Retrieving Objects from the Identity Cache

Example

See also

Security in LINQ to SQL

Access Control and Authentication

Mapping and Schema Information

Connection Strings

See also

Serialization

Overview

Definitions

Code Example

How to Serialize the Entities

Self-Recursive Relationships

See also

Stored Procedures

In This Section

Related Sections

How to: Return Rowsets

Example

See also

How to: Use Stored Procedures that Take Parameters

Example

Example

See also

How to: Use Stored Procedures Mapped for Multiple Result Shapes

Example

Example

See also

How to: Use Stored Procedures Mapped for Sequential Result Shapes

Example

Example

See also

Customizing Operations By Using Stored Procedures

Example

Description

Code

Example

Description

Code

Example

Description

Code

See also

Customizing Operations by Using Stored Procedures Exclusively

Example

Description

Code

See also

Transaction Support

Explicit Local Transaction

Explicit Distributable Transaction

Implicit Transaction

See also

SQL-CLR Type Mismatches

Data Types

Missing Counterparts

Multiple Mappings

User-defined Types

Expression Semantics

Null Semantics

Type Conversion and Promotion

Collation

Operator and Function Differences

Type Casting

Performance Issues

See also

SQL-CLR Custom Type Mappings

Customization with SQLMetal or O/R Designer

Incorporating Database Changes

See also

User-Defined Functions

In This Section

How to: Use Scalar-Valued User-Defined Functions

Example

See also

How to: Use Table-Valued User-Defined Functions

Example

Example

See also

How to: Call User-Defined Functions Inline

Example

See also

Reference

In This Section

Related Sections

Reference

In This Section

Related Sections

Data Types and Functions

See also

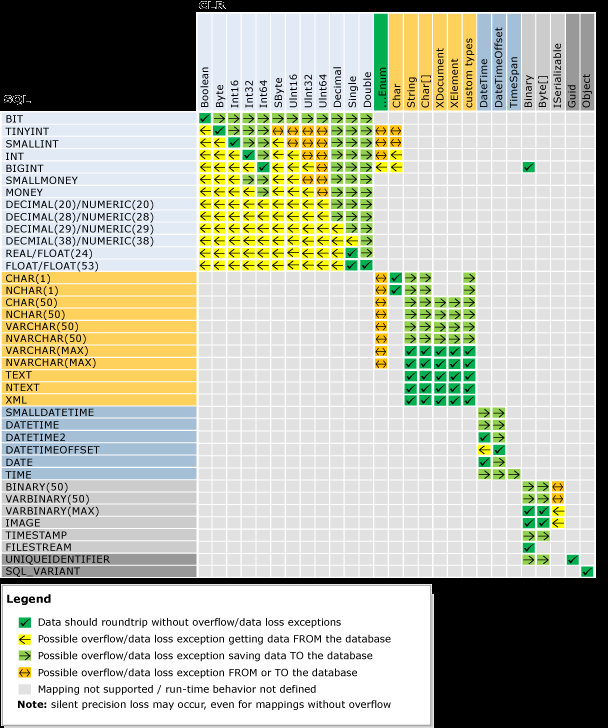

SQL-CLR Type Mapping

Default Type Mapping

Type Mapping Run-time Behavior Matrix

Custom Type Mapping

Behavior Differences Between CLR and SQL Execution

Enum Mapping

Numeric Mapping

Decimal and Money Types

Text and XML Mapping

XML Types

Custom Types

Date and Time Mapping

System.Datetime

System.TimeSpan

Binary Mapping

SQL Server FILESTREAM

Binary Serialization

Miscellaneous Mapping

See also

Basic Data Types

Casting

Equality Operators

See also

Boolean Data Types

See also

Null Semantics

See also

Numeric and Comparison Operators

Supported Operators

See also

Sequence Operators

Differences from .NET

See also

System.Convert Methods

See also

System.DateTime Methods

Supported System.DateTime Members

Members Not Supported by LINQ to SQL

Method Translation Example

SQLMethods Date and Time Methods

See also

System.Math Methods

Differences from .NET

See also

System.Object Methods

Differences from .NET

See also

System.String Methods

Unsupported System.String Methods in General

Unsupported System.String Static Methods

Unsupported System.String Non-static Methods

Differences from .NET

See also

System.TimeSpan Methods

Previous Limitations

Supported System.TimeSpan member support

Addition and Subtraction

See also

System.DateTimeOffset Methods

SQLMethods Date and Time Methods

See also

Attribute-Based Mapping

DatabaseAttribute Attribute

TableAttribute Attribute

ColumnAttribute Attribute

AssociationAttribute Attribute

InheritanceMappingAttribute Attribute

FunctionAttribute Attribute

ParameterAttribute Attribute

ResultTypeAttribute Attribute

DataAttribute Attribute

See also

Code Generation in LINQ to SQL

DBML Extractor

Code Generator

XML Schema Definition File

Sample DBML File

See also

External Mapping

Requirements

XML Schema Definition File

See also

Frequently Asked Questions

Cannot Connect

Changes to Database Lost

Database Connection: Open How Long?

Updating Without Querying

Unexpected Query Results

Unexpected Stored Procedure Results

Serialization Errors

Multiple DBML Files

Avoiding Explicit Setting of Database-Generated Values on Insert or Update

Multiple DataLoadOptions

Errors Using SQL Compact 3.5

Errors in Inheritance Relationships

Provider Model

SQL-Injection Attacks

Changing Read-only Flag in DBML Files

APTCA

Mapping Data from Multiple Tables

Connection Pooling

Second DataContext Is Not Updated

Cannot Call SubmitChanges in Read-only Mode

See also

SQL Server Compact and LINQ to SQL

Characteristics of SQL Server Compact in Relation to LINQ to SQL

Feature Set

See also

Standard Query Operator Translation

Operator Support

Concat

Intersect, Except, Union

Take, Skip

Operators with No Translation

Expression Translation

Null semantics

Aggregates

Entity Arguments

Equatable / Comparable Arguments

Visual Basic Function Translation

Inheritance Support

Inheritance Mapping Restrictions

Inheritance in Queries

SQL Server 2008 Support

Unsupported Query Operators

SQL Server 2005 Support

SQL Server 2000 Support

Cross Apply and Outer Apply Operators

text / ntext

Behavior Triggered by Nested Queries

Skip and Take Operators

Object Materialization

See also

Samples

In This Section

See also

Source/Reference

ADO.NET SQL Server

This section describes features and behaviors that are specific to the .NET Framework Data Provider for SQL Server (System.Data.SqlClient).

System.Data.SqlClient provides access to SQL Server and encapsulates its database-specific protocols. The functionality of the data provider is designed to be similar to that of the .NET Framework data providers for OLE DB, ODBC, and Oracle. System.Data.SqlClient includes a tabular data stream (TDS) parser to communicate directly with SQL Server.

Note

To use the .NET Framework Data Provider for SQL Server, an application must reference the System.Data.SqlClient namespace.

In This Section

SQL Server Security

Provides an overview of SQL Server security features, and application scenarios for creating secure ADO.NET applications that target SQL Server.

SQL Server Data Types and ADO.NET

Describes how to work with SQL Server data types and how they interact with .NET Framework data types.

SQL Server Binary and Large-Value Data

Describes how to work with large value data in SQL Server.

SQL Server Data Operations in ADO.NET

Describes how to work with data in SQL Server. Contains sections about bulk copy operations, MARS, asynchronous operations, and table-valued parameters.

SQL Server Features and ADO.NET

Describes SQL Server features that are useful for ADO.NET application developers.

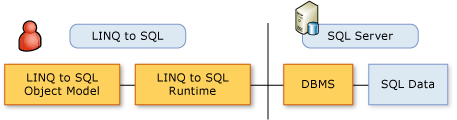

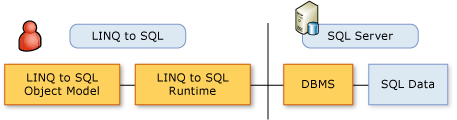

LINQ to SQL

Describes the basic building blocks, processes, and techniques required for creating LINQ to SQL applications.

For complete documentation of the SQL Server Database Engine, see SQL Server Books Online for the version of SQL Server you are using.

See also

- Securing ADO.NET Applications

- Data Type Mappings in ADO.NET

- DataSets, DataTables, and DataViews

- Retrieving and Modifying Data in ADO.NET

- ADO.NET Managed Providers and DataSet Developer Center

SQL Server Security

SQL Server has many features that support creating secure database applications.

Common security considerations, such as data theft or vandalism, apply regardless of the version of SQL Server you are using. Data integrity should also be considered a security issue. Unprotected data can become worthless if ad hoc data manipulation is permitted and the data is inadvertently or maliciously modified with incorrect values or deleted entirely. In addition, there are often legal requirements that must be adhered to, such as the correct storage of confidential information. Storing some kinds of personal data is proscribed entirely, depending on the laws that apply in a particular jurisdiction.

Each version of SQL Server has different security features, as does each version of Windows, with later versions having enhanced functionality over earlier ones. It is important to understand that security features alone cannot guarantee a secure database application. Each database application is unique in its requirements, execution environment, deployment model, physical location, and user population. Some applications that are local in scope may need only minimal security, whereas other local applications, or applications deployed over the Internet, may require stringent security measures and ongoing monitoring and evaluation.

The security requirements of a SQL Server database application should be considered at design time, not as an afterthought. Evaluating threats early in the development cycle gives you the opportunity to mitigate potential damage wherever a vulnerability is detected.

Even if the initial design of an application is sound, new threats may emerge as the system evolves. By creating multiple lines of defense around your database, you can minimize the damage inflicted by a security breach. Your first line of defense is to reduce the attack surface area by never granting more permissions than are absolutely necessary.

The topics in this section briefly describe the security features in SQL Server that are relevant for developers, with links to relevant topics in SQL Server Books Online and other resources that provide more detailed coverage.

In This Section

Overview of SQL Server Security

Describes the architecture and security features of SQL Server.

Application Security Scenarios in SQL Server

Contains topics discussing various application security scenarios for ADO.NET and SQL Server applications.

SQL Server Express Security

Describes security considerations for SQL Server Express.

Related Sections

Security Center for SQL Server Database Engine and Azure SQL Database

Describes security considerations for SQL Server and Azure SQL Database.

Security Considerations for a SQL Server Installation

Describes security concerns to consider before installing SQL Server.

See also

Overview of SQL Server Security

A defense-in-depth strategy, with overlapping layers of security, is the best way to counter security threats. SQL Server provides a security architecture that is designed to allow database administrators and developers to create secure database applications and counter threats. Each version of SQL Server has improved on previous versions of SQL Server with the introduction of new features and functionality. However, security does not ship in the box. Each application is unique in its security requirements. Developers need to understand which combination of features and functionality are most appropriate to counter known threats, and to anticipate threats that may arise in the future.

A SQL Server instance contains a hierarchical collection of entities, starting with the server. Each server contains multiple databases, and each database contains a collection of securable objects. Every SQL Server securable has associated permissions that can be granted to a principal, which is an individual, group or process granted access to SQL Server. The SQL Server security framework manages access to securable entities through authentication and authorization.

- Authentication is the process of logging on to SQL Server by which a principal requests access by submitting credentials that the server evaluates. Authentication establishes the identity of the user or process being authenticated.

- Authorization is the process of determining which securable resources a principal can access, and which operations are allowed for those resources.

The topics in this section cover SQL Server security fundamentals, providing links to the complete documentation in the relevant version of SQL Server Books Online.

In This Section

Authentication in SQL Server

Describes logins and authentication in SQL Server and provides links to additional resources.

Server and Database Roles in SQL Server

Describes fixed server and database roles, custom database roles, and built-in accounts and provides links to additional resources.

Ownership and User-Schema Separation in SQL Server

Describes object ownership and user-schema separation and provides links to additional resources.

Authorization and Permissions in SQL Server

Describes granting permissions using the principle of least privilege and provides links to additional resources.

Data Encryption in SQL Server

Describes data encryption options in SQL Server and provides links to additional resources.

CLR Integration Security in SQL Server

Provides links to CLR integration security resources.

See also

- Securing ADO.NET Applications

- SQL Server Security

- Application Security Scenarios in SQL Server

- ADO.NET Managed Providers and DataSet Developer Center

Authentication in SQL Server

SQL Server supports two authentication modes, Windows authentication mode and mixed mode.

- Windows authentication is the default, and is often referred to as integrated security because this SQL Server security model is tightly integrated with Windows. Specific Windows user and group accounts are trusted to log in to SQL Server. Windows users who have already been authenticated do not have to present additional credentials.

- Mixed mode supports authentication both by Windows and by SQL Server. User name and password pairs are maintained within SQL Server.

Important

We recommend using Windows authentication wherever possible. Windows authentication uses a series of encrypted messages to authenticate users in SQL Server. When SQL Server logins are used, SQL Server login names and encrypted passwords are passed across the network, which makes them less secure.

With Windows authentication, users are already logged onto Windows and do not have to log on separately to SQL Server. The following SqlConnection.ConnectionString specifies Windows authentication without requiring users to provide a user name or password.

"Server=MSSQL1;Database=AdventureWorks;Integrated Security=true;"

Note

Logins are distinct from database users. You must map logins or Windows groups to database users or roles in a separate operation. You then grant permissions to users or roles to access database objects.

Authentication Scenarios

Windows authentication is usually the best choice in the following situations:

- There is a domain controller.

- The application and the database are on the same computer.

- You are using an instance of SQL Server Express or LocalDB.

SQL Server logins are often used in the following situations:

- You have a workgroup.

- Users connect from different, non-trusted domains.

- You are building Internet applications, such as ASP.NET applications.

Note

Specifying Windows authentication does not disable SQL Server logins. Use the ALTER LOGIN DISABLE Transact-SQL statement to disable highly-privileged SQL Server logins.

Login Types

SQL Server supports three types of logins:

- A local Windows user account or trusted domain account. SQL Server relies on Windows to authenticate the Windows user accounts.

- Windows group. Granting access to a Windows group grants access to all Windows user logins that are members of the group.

- SQL Server login. SQL Server stores both the username and a hash of the password in the master database, by using internal authentication methods to verify login attempts.

Note

SQL Server provides logins created from certificates or asymmetric keys that are used only for code signing. They cannot be used to connect to SQL Server.

Mixed Mode Authentication

If you must use mixed mode authentication, you must create SQL Server logins, which are stored in SQL Server. You then have to supply the SQL Server user name and password at run time.

Important

SQL Server installs with a SQL Server login named sa (an abbreviation of "system administrator"). Assign a strong password to the sa login and do not use the sa login in your application. The sa login maps to the sysadmin fixed server role, which has irrevocable administrative credentials on the whole server. There are no limits to the potential damage if an attacker gains access as a system administrator. All members of the Windows BUILTIN\Administrators group (the local administrator's group) are members of the sysadmin role by default, but can be removed from that role.

SQL Server provides Windows password policy mechanisms for SQL Server logins when it is running on Windows Server 2003 or later versions. Password complexity policies are designed to deter brute force attacks by increasing the number of possible passwords. SQL Server can apply the same complexity and expiration policies used in Windows Server 2003 to passwords used inside SQL Server.

Important

Concatenating connection strings from user input can leave you vulnerable to a connection string injection attack. Use the SqlConnectionStringBuilder to create syntactically valid connection strings at run time. For more information, see Connection String Builders.
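The difference between concatenation and builder-style construction can be sketched in a few lines. The Python below is only a language-neutral model, not the .NET API: `naive_connection_string`, `build_connection_string`, `quote_value`, and `parse_keywords` are hypothetical helpers, and the quoting rule is a simplified assumption about how a builder keeps a value from being read as extra keyword pairs.

```python
ALLOWED_KEYWORDS = {"Server", "Database", "Integrated Security"}


def naive_connection_string(server, database):
    """Unsafe: pastes user input straight into the connection string."""
    return f"Server={server};Database={database};Integrated Security=true"


def quote_value(value):
    """Wrap a value in double quotes when it contains delimiter characters."""
    if any(ch in value for ch in ';="\'') or value != value.strip():
        return '"' + value.replace('"', '""') + '"'
    return value


def build_connection_string(pairs):
    """Builder-style construction: known keywords only, values quoted as needed."""
    parts = []
    for keyword, value in pairs.items():
        if keyword not in ALLOWED_KEYWORDS:
            raise ValueError(f"unsupported keyword: {keyword!r}")
        parts.append(f"{keyword}={quote_value(value)}")
    return ";".join(parts)


def parse_keywords(connection_string):
    """Split a connection string into (keyword, value) pairs, honoring double quotes."""
    pairs, token, in_quotes = [], "", False
    for ch in connection_string + ";":
        if ch == '"':
            in_quotes = not in_quotes
            token += ch
        elif ch == ";" and not in_quotes:
            if token.strip():
                keyword, _, value = token.partition("=")
                pairs.append((keyword.strip(), value.strip()))
            token = ""
        else:
            token += ch
    return pairs


user_input = "AdventureWorks;NewValue=Bad"  # attacker-controlled database name
naive = naive_connection_string("MSSQL1", user_input)
safe = build_connection_string(
    {"Server": "MSSQL1", "Database": user_input, "Integrated Security": "true"}
)
print(parse_keywords(naive))  # the injected NewValue keyword appears as its own pair
print(parse_keywords(safe))   # the whole input stays inside the Database value
```

In the concatenated string the injected text is parsed as an additional keyword pair, whereas the builder-style version keeps it inside a single quoted value, where the server would most likely reject it as an invalid database name.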

External Resources

For more information, see the following resources.

| Resource | Description |
|---|---|
| Principals | Describes logins and other security principals in SQL Server. |

See also

- Securing ADO.NET Applications

- Application Security Scenarios in SQL Server

- Connecting to a Data Source

- Connection Strings

- ADO.NET Managed Providers and DataSet Developer Center

Server and Database Roles in SQL Server

All versions of SQL Server use role-based security, which allows you to assign permissions to a role, or group of users, instead of to individual users. Fixed server and fixed database roles have a fixed set of permissions assigned to them.

Fixed Server Roles

Fixed server roles have a fixed set of permissions and server-wide scope. They are intended for use in administering SQL Server and the permissions assigned to them cannot be changed. Logins can be assigned to fixed server roles without having a user account in a database.

Important

The sysadmin fixed server role encompasses all other roles and has unlimited scope. Do not add principals to this role unless they are highly trusted. sysadmin role members have irrevocable administrative privileges on all server databases and resources.

Be selective when you add users to fixed server roles. For example, the bulkadmin role allows users to insert the contents of any local file into a table, which could jeopardize data integrity. See SQL Server Books Online for the complete list of fixed server roles and permissions.

Fixed Database Roles

Fixed database roles have a pre-defined set of permissions that are designed to allow you to easily manage groups of permissions. Members of the db_owner role can perform all configuration and maintenance activities on the database.

For more information about SQL Server predefined roles, see the following resources.

| Resource | Description |
|---|---|
| Server-Level Roles | Describes fixed server roles and the permissions associated with them in SQL Server. |
| Database-Level Roles | Describes fixed database roles and the permissions associated with them. |

Database Roles and Users

Logins must be mapped to database user accounts in order to work with database objects. Database users can then be added to database roles, inheriting any permission sets associated with those roles. All permissions can be granted.

You must also consider the public role, the dbo user account, and the guest account when you design security for your application.

The public Role

The public role is contained in every database, which includes system databases. It cannot be dropped and you cannot add or remove users from it. Permissions granted to the public role are inherited by all other users and roles because they belong to the public role by default. Grant public only the permissions you want all users to have.

The dbo User Account

The dbo, or database owner, is a user account that has implied permissions to perform all activities in the database. Members of the sysadmin fixed server role are automatically mapped to dbo.

Note

dbo is also the name of a schema, as discussed in Ownership and User-Schema Separation in SQL Server.

The dbo user account is frequently confused with the db_owner fixed database role. The scope of db_owner is a database; the scope of sysadmin is the whole server. Membership in the db_owner role does not confer dbo user privileges.

The guest User Account

After a user has been authenticated and allowed to log in to an instance of SQL Server, a separate user account must exist in each database the user has to access. Requiring a user account in each database prevents users from connecting to an instance of SQL Server and accessing all the databases on a server. The existence of a guest user account in the database circumvents this requirement by allowing a login without a database user account to access a database.

The guest account is a built-in account in all versions of SQL Server. By default, it is disabled in new databases. If it is enabled, you can disable it by revoking its CONNECT permission by executing the Transact-SQL REVOKE CONNECT FROM GUEST statement.

Important

Avoid using the guest account; all logins without their own database permissions obtain the database permissions granted to this account. If you must use the guest account, grant it minimum permissions.

For more information about SQL Server logins, users and roles, see the following resources.

| Resource | Description |
|---|---|
| Getting Started with Database Engine Permissions | Contains links to topics that describe principals, roles, credentials, securables and permissions. |
| Principals | Describes principals and contains links to topics that describe server and database roles. |

See also

- Securing ADO.NET Applications

- Application Security Scenarios in SQL Server

- Authentication in SQL Server

- Ownership and User-Schema Separation in SQL Server

- Authorization and Permissions in SQL Server

- ADO.NET Managed Providers and DataSet Developer Center

Ownership and User-Schema Separation in SQL Server

A core concept of SQL Server security is that owners of objects have irrevocable permissions to administer them. You cannot remove privileges from an object owner, and you cannot drop users from a database if they own objects in it.

User-Schema Separation

User-schema separation allows for more flexibility in managing database object permissions. A schema is a named container for database objects, which allows you to group objects into separate namespaces. For example, the AdventureWorks sample database contains schemas for Production, Sales, and HumanResources.

The four-part naming syntax for referring to objects specifies the schema name.

Server.Database.DatabaseSchema.DatabaseObject

Schema Owners and Permissions

Schemas can be owned by any database principal, and a single principal can own multiple schemas. You can apply security rules to a schema, which are inherited by all objects in the schema. Once you set up access permissions for a schema, those permissions are automatically applied as new objects are added to the schema. Users can be assigned a default schema, and multiple database users can share the same schema.

By default, when developers create objects in a schema, the objects are owned by the security principal that owns the schema, not the developer. Object ownership can be transferred with the ALTER AUTHORIZATION Transact-SQL statement. A schema can also contain objects that are owned by different users and have more granular permissions than those assigned to the schema, although this is not recommended because it adds complexity to managing permissions. Objects can be moved between schemas, and schema ownership can be transferred between principals. Database users can be dropped without affecting schemas.

Built-In Schemas

SQL Server ships with ten pre-defined schemas that have the same names as the built-in database users and roles. These exist mainly for backward compatibility. You can drop the schemas that have the same names as the fixed database roles if you do not need them. You cannot drop the following schemas:

- dbo

- guest

- sys

- INFORMATION_SCHEMA

If you drop the schemas named after the fixed database roles from the model database, they will not appear in new databases.

Note

The sys and INFORMATION_SCHEMA schemas are reserved for system objects. You cannot create objects in these schemas and you cannot drop them.

The dbo Schema

The dbo schema is the default schema for a newly created database. The dbo schema is owned by the dbo user account. By default, users created with the CREATE USER Transact-SQL command have dbo as their default schema.

Users who are assigned the dbo schema do not inherit the permissions of the dbo user account. No permissions are inherited from a schema by users; schema permissions are inherited by the database objects contained in the schema.

Note

When database objects are referenced by using a one-part name, SQL Server first looks in the user's default schema. If the object is not found there, SQL Server looks next in the dbo schema. If the object is not in the dbo schema, an error is returned.
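The lookup order in the note above can be modeled as a short function. This is a Python sketch of the rule as stated, not SQL Server's implementation: `resolve_one_part_name`, `ObjectNotFoundError`, and the contents of `catalog` are hypothetical and exist only for illustration.

```python
class ObjectNotFoundError(LookupError):
    """Raised when a one-part name is found in neither schema."""


def resolve_one_part_name(name, default_schema, catalog):
    """Return the schema-qualified name, checking the default schema, then dbo."""
    for schema in (default_schema, "dbo"):
        if name in catalog.get(schema, set()):
            return f"{schema}.{name}"
    raise ObjectNotFoundError(f"Invalid object name {name!r}")


# Hypothetical catalog: schema name -> object names it contains.
catalog = {
    "Sales": {"Customer", "Orders"},
    "dbo": {"ErrorLog", "Orders"},
}

# Found in the user's default schema, so dbo.Orders is never considered.
print(resolve_one_part_name("Orders", "Sales", catalog))
# Not in Sales, so the lookup falls back to the dbo schema.
print(resolve_one_part_name("ErrorLog", "Sales", catalog))
```

Because the same one-part name can resolve to different objects for users with different default schemas, schema-qualified names make the intended object explicit.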

External Resources

For more information on object ownership and schemas, see the following resources.

| Resource | Description |
|---|---|
| User-Schema Separation | Describes the changes introduced by user-schema separation. Includes new behavior, its impact on ownership, catalog views, and permissions. |

See also

- Securing ADO.NET Applications

- Application Security Scenarios in SQL Server

- Authentication in SQL Server

- Server and Database Roles in SQL Server

- Authorization and Permissions in SQL Server

- ADO.NET Managed Providers and DataSet Developer Center

Authorization and Permissions in SQL Server

When you create database objects, you must explicitly grant permissions to make them accessible to users. Every securable object has permissions that can be granted to a principal using permission statements.

The Principle of Least Privilege

Developing an application using a least-privileged user account (LUA) approach is an important part of a defense-in-depth strategy for countering security threats. The LUA approach ensures that users follow the principle of least privilege and always log on with limited user accounts. Administrative tasks are broken out using fixed server roles, and the use of the sysadmin fixed server role is severely restricted.

Always follow the principle of least privilege when granting permissions to database users. Grant the minimum permissions necessary to a user or role to accomplish a given task.

Important

Developing and testing an application using the LUA approach adds a degree of difficulty to the development process. It is easier to create objects and write code while logged on as a system administrator or database owner than it is using a LUA account. However, developing applications using a highly privileged account can obfuscate the impact of reduced functionality when least privileged users attempt to run an application that requires elevated permissions in order to function correctly. Granting excessive permissions to users in order to reacquire lost functionality can leave your application vulnerable to attack. Designing, developing and testing your application logged on with a LUA account enforces a disciplined approach to security planning that eliminates unpleasant surprises and the temptation to grant elevated privileges as a quick fix. You can use a SQL Server login for testing even if your application is intended to deploy using Windows authentication.

Role-Based Permissions

Granting permissions to roles rather than to users simplifies security administration. Permission sets that are assigned to roles are inherited by all members of the role. It is easier to add or remove users from a role than it is to recreate separate permission sets for individual users. Roles can be nested; however, too many levels of nesting can degrade performance. You can also add users to fixed database roles to simplify assigning permissions.

You can grant permissions at the schema level. Users automatically inherit permissions on all new objects created in the schema; you do not need to grant permissions as new objects are created.

Permissions Through Procedural Code

Encapsulating data access through modules such as stored procedures and user-defined functions provides an additional layer of protection around your application. You can prevent users from directly interacting with database objects by granting permissions only to stored procedures or functions while denying permissions to underlying objects such as tables. SQL Server achieves this by ownership chaining.

Permission Statements

The three Transact-SQL permission statements are described in the following table.

| Permission Statement | Description |
|---|---|
| GRANT | Grants a permission. |
| REVOKE | Revokes a permission. This is the default state of a new object. A permission revoked from a user or role can still be inherited from other groups or roles to which the principal is assigned. |
| DENY | DENY revokes a permission so that it cannot be inherited. DENY takes precedence over all permissions, except DENY does not apply to object owners or members of sysadmin. If you DENY permissions on an object to the public role it is denied to all users and roles except for object owners and sysadmin members. |

- The GRANT statement can assign permissions to a group or role that can be inherited by database users. However, the DENY statement takes precedence over all other permission statements. Therefore, a user who has been denied a permission cannot inherit it from another role.

Note

Members of the sysadmin fixed server role and object owners cannot be denied permissions.
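How the three statements interact can be illustrated with a small model. The Python below is a deliberately simplified sketch of the precedence rules described in this section, not SQL Server's actual permission engine: `PermissionStore`, `has_permission`, the `exempt` flag, and the principal names are all hypothetical.

```python
class PermissionStore:
    """Tracks explicit GRANT/DENY entries per (principal, permission)."""

    def __init__(self):
        self._entries = {}

    def grant(self, principal, permission):
        self._entries[(principal, permission)] = "GRANT"

    def deny(self, principal, permission):
        self._entries[(principal, permission)] = "DENY"

    def revoke(self, principal, permission):
        # REVOKE removes an explicit GRANT or DENY, returning to the default state.
        self._entries.pop((principal, permission), None)

    def state(self, principal, permission):
        return self._entries.get((principal, permission))


def has_permission(store, user, roles, permission, exempt=False):
    """Effective permission for a user who belongs to the given roles."""
    if exempt:  # object owner or sysadmin member: DENY does not apply
        return True
    states = {store.state(p, permission) for p in (user, *roles)}
    if "DENY" in states:  # DENY overrides any GRANT, direct or inherited
        return False
    return "GRANT" in states


store = PermissionStore()
store.grant("SalesRole", "SELECT on Orders")
inherited = has_permission(store, "alice", ["SalesRole"], "SELECT on Orders")

store.deny("alice", "SELECT on Orders")
after_deny = has_permission(store, "alice", ["SalesRole"], "SELECT on Orders")

store.revoke("alice", "SELECT on Orders")  # removes the DENY; the role GRANT remains
after_revoke = has_permission(store, "alice", ["SalesRole"], "SELECT on Orders")

print(inherited, after_deny, after_revoke)
```

The model shows why REVOKE and DENY are not interchangeable: revoking a user's own entry leaves inherited grants in effect, while a DENY blocks the permission regardless of role membership.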

Ownership Chains

SQL Server ensures that only principals that have been granted permission can access objects. When multiple database objects access each other, the sequence is known as a chain. When SQL Server is traversing the links in the chain, it evaluates permissions differently than it would if it were accessing each item separately. When an object is accessed through a chain, SQL Server first compares the object's owner to the owner of the calling object (the previous link in the chain). If both objects have the same owner, permissions on the referenced object are not checked. Whenever an object accesses another object that has a different owner, the ownership chain is broken and SQL Server must check the caller's security context.

Procedural Code and Ownership Chaining

Suppose that a user is granted execute permissions on a stored procedure that selects data from a table. If the stored procedure and the table have the same owner, the user doesn't need to be granted any permissions on the table and can even be denied permissions. However, if the stored procedure and the table have different owners, SQL Server must check the user's permissions on the table before allowing access to the data.
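The scenario above reduces to a single ownership comparison. The following Python sketch models that check under simplifying assumptions (one procedure, one table, permissions held directly by the user); `DbObject`, `can_read_through_procedure`, and the object and user names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DbObject:
    name: str
    owner: str


def can_read_through_procedure(user, procedure, table, grants):
    """grants maps a user name to a set of (permission, object name) pairs."""
    user_grants = grants.get(user, set())
    if ("EXECUTE", procedure.name) not in user_grants:
        return False
    if procedure.owner == table.owner:
        return True  # unbroken chain: permissions on the table are not checked
    # Broken chain: the caller's own permissions on the table are evaluated.
    return ("SELECT", table.name) in user_grants


orders = DbObject("Orders", owner="dbo")
same_owner_proc = DbObject("GetOrders", owner="dbo")
other_owner_proc = DbObject("GetOrdersExternal", owner="reporting_user")

grants = {"alice": {("EXECUTE", "GetOrders"), ("EXECUTE", "GetOrdersExternal")}}

print(can_read_through_procedure("alice", same_owner_proc, orders, grants))
print(can_read_through_procedure("alice", other_owner_proc, orders, grants))
```

In this model the first call succeeds although alice holds no permission on the table, and the second fails because the differing owners break the chain.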

Note

Ownership chaining does not apply in the case of dynamic SQL statements. To call a procedure that executes an SQL statement, the caller must be granted permissions on the underlying tables, leaving your application vulnerable to SQL injection attacks. SQL Server provides new mechanisms, such as impersonation and signing modules with certificates, that do not require granting permissions on the underlying tables. These can also be used with CLR stored procedures.

External Resources

For more information, see the following resources.

| Resource | Description |
|---|---|
| Permissions | Contains topics describing permissions hierarchy, catalog views, and permissions of fixed server and database roles. |

See also

- Securing ADO.NET Applications

- Application Security Scenarios in SQL Server

- Authentication in SQL Server

- Server and Database Roles in SQL Server

- Ownership and User-Schema Separation in SQL Server

- ADO.NET Managed Providers and DataSet Developer Center

Data Encryption in SQL Server

SQL Server provides functions to encrypt and decrypt data using a certificate, asymmetric key, or symmetric key. It manages all of these in an internal certificate store. The store uses an encryption hierarchy that secures certificates and keys at one level with the layer above it in the hierarchy. This feature area of SQL Server is called Secret Storage.

The fastest mode of encryption supported by the encryption functions is symmetric key encryption. This mode is suitable for handling large volumes of data. The symmetric keys can be encrypted by certificates, passwords or other symmetric keys.

Keys and Algorithms

SQL Server supports several symmetric key encryption algorithms, including DES, Triple DES, RC2, RC4, 128-bit RC4, DESX, 128-bit AES, 192-bit AES, and 256-bit AES. The algorithms are implemented using the Windows Crypto API.

Within the scope of a database connection, SQL Server can maintain multiple open symmetric keys. An open key is retrieved from the store and is available for decrypting data. When a piece of data is decrypted, there is no need to specify the symmetric key to use. Each encrypted value contains the key identifier (key GUID) of the key used to encrypt it. The engine matches the encrypted byte stream to an open symmetric key, if the correct key has been decrypted and is open. This key is then used to perform decryption and return the data. If the correct key is not open, NULL is returned.
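The key-matching behavior described above can be pictured with a toy model. The Python below only illustrates the lookup by key GUID among open keys. The XOR routine is a stand-in and is not secure encryption, and `KeySession` and its methods are hypothetical names that do not correspond to any SQL Server API or storage format.

```python
import uuid
from itertools import cycle


def _xor(data, key):
    # Toy stand-in for a real cipher; XOR is NOT secure encryption.
    return bytes(b ^ k for b, k in zip(data, cycle(key)))


class KeySession:
    """Models the open symmetric keys within one database connection."""

    def __init__(self):
        self._open_keys = {}  # key GUID -> key bytes

    def open_key(self, key_guid, key_bytes):
        self._open_keys[key_guid] = key_bytes

    def close_key(self, key_guid):
        self._open_keys.pop(key_guid, None)

    def encrypt(self, key_guid, plaintext):
        # The encrypted value carries the GUID of the key that produced it.
        return key_guid.bytes + _xor(plaintext, self._open_keys[key_guid])

    def decrypt(self, ciphertext):
        # The caller names no key; the GUID prefix selects it.
        key_guid = uuid.UUID(bytes=ciphertext[:16])
        key = self._open_keys.get(key_guid)
        if key is None:
            return None  # mirrors the NULL result when the key is not open
        return _xor(ciphertext[16:], key)


session = KeySession()
key_guid = uuid.uuid4()
session.open_key(key_guid, b"demo-key-material")
encrypted = session.encrypt(key_guid, b"confidential value")

while_open = session.decrypt(encrypted)
session.close_key(key_guid)
after_close = session.decrypt(encrypted)
```

Here `while_open` holds the original bytes and `after_close` is `None`, which corresponds to the NULL returned when the correct key is not open.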

For an example that shows how to work with encrypted data in a database, see Encrypt a Column of Data.

External Resources

For more information on data encryption, see the following resources.

| Resource | Description |
|---|---|
| SQL Server Encryption | Provides an overview of encryption in SQL Server. This topic includes links to additional articles. |
| Encryption Hierarchy | Provides an overview of the encryption hierarchy in SQL Server. This topic provides links to additional articles. |

See also

- Securing ADO.NET Applications

- Application Security Scenarios in SQL Server

- Authentication in SQL Server

- Server and Database Roles in SQL Server

- Ownership and User-Schema Separation in SQL Server

- Authorization and Permissions in SQL Server

- ADO.NET Managed Providers and DataSet Developer Center

CLR Integration Security in SQL Server

Microsoft SQL Server provides the integration of the common language runtime (CLR) component of the .NET Framework. CLR integration allows you to write stored procedures, triggers, user-defined types, user-defined functions, user-defined aggregates, and streaming table-valued functions, using any .NET Framework language, such as Microsoft Visual Basic .NET or Microsoft Visual C#.

The CLR supports a security model called code access security (CAS) for managed code. In this model, permissions are granted to assemblies based on evidence supplied by the code in metadata. SQL Server integrates the user-based security model of SQL Server with the code access-based security model of the CLR.

External Resources

For more information on CLR integration with SQL Server, see the following resources.

| Resource | Description |
|---|---|
| Code Access Security | Contains topics describing CAS in the .NET Framework. |
| CLR Integration Security | Discusses the security model for managed code executing inside of SQL Server. |

See also

- Securing ADO.NET Applications

- Application Security Scenarios in SQL Server

- SQL Server Common Language Runtime Integration

- ADO.NET Overview

Application Security Scenarios in SQL Server

There is no single correct way to create a secure SQL Server client application. Every application is unique in its requirements, deployment environment, and user population. An application that is reasonably secure when it is initially deployed can become less secure over time. It is impossible to predict with any accuracy what threats may emerge in the future.

SQL Server, as a product, has evolved over many versions to incorporate the latest security features that enable developers to create secure database applications. However, security doesn't come in the box; it requires continual monitoring and updating.

Common Threats

Developers need to understand security threats, the tools provided to counter them, and how to avoid self-inflicted security holes. Security can best be thought of as a chain, where a break in any one link compromises the strength of the whole. The following list includes some common security threats that are discussed in more detail in the topics in this section.

SQL Injection

SQL Injection is the process by which a malicious user enters Transact-SQL statements instead of valid input. If the input is passed directly to the server without being validated and if the application inadvertently executes the injected code, then the attack has the potential to damage or destroy data. You can thwart SQL injection attacks by using stored procedures and parameterized commands, avoiding dynamic SQL, and restricting permissions on all users.
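The contrast between concatenated and parameterized commands can be demonstrated with any database API that supports parameters. The sketch below uses Python's built-in `sqlite3` module with an in-memory SQLite database as a stand-in for SQL Server. The `users` table, its rows, and the malicious input are made up for the example, and the same principle applies to parameterized commands in ADO.NET.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, secret TEXT)")
conn.executemany(
    "INSERT INTO users VALUES (?, ?)",
    [("alice", "a-secret"), ("bob", "b-secret")],
)

malicious = "nobody' OR '1'='1"  # input crafted to change the WHERE clause

# Unsafe: the input becomes part of the statement text and is parsed as SQL.
unsafe_sql = f"SELECT name FROM users WHERE name = '{malicious}'"
unsafe_rows = conn.execute(unsafe_sql).fetchall()

# Safe: the input is bound as a parameter value and is never parsed as SQL.
safe_rows = conn.execute(
    "SELECT name FROM users WHERE name = ?", (malicious,)
).fetchall()

print(len(unsafe_rows), len(safe_rows))
```

The concatenated statement returns every row because the injected `OR` condition is always true, while the parameterized statement looks for a user literally named `nobody' OR '1'='1` and returns nothing.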

Elevation of Privilege

Elevation of privilege attacks occur when a user is able to assume the privileges of a trusted account, such as an owner or administrator. Always run under least-privileged user accounts and assign only needed permissions. Avoid using administrative or owner accounts for executing code. This limits the amount of damage that can occur if an attack succeeds. When performing tasks that require additional permissions, use procedure signing or impersonation only for the duration of the task. You can sign stored procedures with certificates or use impersonation to temporarily assign permissions.

Probing and Intelligent Observation

A probing attack can use error messages generated by an application to search for security vulnerabilities. Implement error handling in all procedural code to prevent SQL Server error information from being returned to the end user.

Authentication

A connection string injection attack can occur when using SQL Server logins if a connection string based on user input is constructed at run time. If the connection string is not checked for valid keyword pairs, an attacker can insert extra characters, potentially accessing sensitive data or other resources on the server. Use Windows authentication wherever possible. If you must use SQL Server logins, use the SqlConnectionStringBuilder to create and validate connection strings at run time.

Passwords

Many attacks succeed because an intruder was able to obtain or guess a password for a privileged user. Passwords are your first line of defense against intruders, so setting strong passwords is essential to the security of your system. Create and enforce password policies for mixed mode authentication.

Always assign a strong password to the sa account, even when using Windows Authentication.

In This Section

Managing Permissions with Stored Procedures in SQL Server

Describes how to use stored procedures to manage permissions and control data access. Using stored procedures is an effective way to respond to many security threats.

Writing Secure Dynamic SQL in SQL Server

Describes techniques for writing secure dynamic SQL using stored procedures.

Signing Stored Procedures in SQL Server

Describes how to sign a stored procedure with a certificate to enable users to work with data they do not have direct access to. This enables stored procedures to perform operations that the caller does not have permissions to perform directly.

Customizing Permissions with Impersonation in SQL Server

Describes how to use the EXECUTE AS clause to impersonate another user. Impersonation switches the execution context from the caller to the specified user.

Granting Row-Level Permissions in SQL Server

Describes how to implement row-level permissions to restrict data access.

Creating Application Roles in SQL Server

Describes features and functionality of application roles.

Enabling Cross-Database Access in SQL Server

Describes how to enable cross-database access without jeopardizing security.

See also

- SQL Server Security

- Overview of SQL Server Security

- Securing ADO.NET Applications

- ADO.NET Managed Providers and DataSet Developer Center

Managing Permissions with Stored Procedures in SQL Server

One method of creating multiple lines of defense around your database is to implement all data access using stored procedures or user-defined functions. You revoke or deny all permissions to underlying objects, such as tables, and grant EXECUTE permissions on stored procedures. This effectively creates a security perimeter around your data and database objects.

Stored Procedure Benefits

Stored procedures have the following benefits:

- Data logic and business rules can be encapsulated so that users can access data and objects only in ways that developers and database administrators intend.

- Parameterized stored procedures that validate all user input can be used to thwart SQL injection attacks. If you use dynamic SQL, be sure to parameterize your commands, and never include parameter values directly in a query string.

- Ad hoc queries and data modifications can be disallowed. This prevents users from maliciously or inadvertently destroying data or executing queries that impair performance on the server or the network.

- Errors can be handled in procedure code without being passed directly to client applications. This prevents error messages from being returned that could aid in a probing attack. Log errors and handle them on the server.

- Stored procedures can be written once, and accessed by many applications.

- Client applications do not need to know anything about the underlying data structures. Stored procedure code can be changed without requiring changes in client applications as long as the changes do not affect parameter lists or returned data types.

- Stored procedures can reduce network traffic by combining multiple operations into one procedure call.
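
The error-handling benefit can be sketched as follows. This is an illustration only: the tables `dbo.Orders` and `dbo.ErrorLog`, the procedure `dbo.DeleteOrder`, and the error number 50001 are hypothetical names chosen for the example, and `THROW` assumes SQL Server 2012 or later.

```sql
-- Hypothetical tables used only for this sketch.
CREATE TABLE dbo.Orders (OrderId int PRIMARY KEY);
CREATE TABLE dbo.ErrorLog (
    ErrorLogId   int IDENTITY(1,1) PRIMARY KEY,
    LoggedAt     datetime2 NOT NULL DEFAULT SYSUTCDATETIME(),
    ErrorNumber  int NULL,
    ErrorProc    nvarchar(128) NULL,
    ErrorMessage nvarchar(4000) NULL
);
GO  -- batch separator used by sqlcmd and SQL Server Management Studio

CREATE PROCEDURE dbo.DeleteOrder
    @OrderId int
AS
BEGIN
    SET NOCOUNT ON;
    BEGIN TRY
        DELETE FROM dbo.Orders WHERE OrderId = @OrderId;
    END TRY
    BEGIN CATCH
        -- Keep the detailed error on the server.
        INSERT INTO dbo.ErrorLog (ErrorNumber, ErrorProc, ErrorMessage)
        VALUES (ERROR_NUMBER(), ERROR_PROCEDURE(), ERROR_MESSAGE());

        -- Return only a generic message to the client.
        THROW 50001, N'The order could not be deleted.', 1;
    END CATCH
END;
```

The client sees the generic message, while object names and constraint details that could help a probing attack stay in the log table.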

Stored Procedure Execution

Stored procedures take advantage of ownership chaining to provide access to data so that users do not need to have explicit permission to access database objects. An ownership chain exists when objects that access each other sequentially are owned by the same user. For example, a stored procedure can call other stored procedures, or a stored procedure can access multiple tables. If all objects in the chain of execution have the same owner, then SQL Server checks only the caller's EXECUTE permission on the procedure, not the caller's permissions on the other objects. Therefore, you need to grant only EXECUTE permissions on stored procedures; you can revoke or deny all permissions on the underlying tables.
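
A minimal sketch of this behavior follows, assuming a test database in which you can create objects. All names (`dbo.Customers`, `dbo.GetCustomer`, `SalesReader`, `SalesUser`) are hypothetical, and `ALTER ROLE ... ADD MEMBER` assumes SQL Server 2012 or later.

```sql
-- Both objects live in the dbo schema, so they share one owner.
CREATE TABLE dbo.Customers (
    CustomerId int PRIMARY KEY,
    Name       nvarchar(100) NOT NULL
);
GO  -- batch separator used by sqlcmd and SQL Server Management Studio

CREATE PROCEDURE dbo.GetCustomer
    @CustomerId int
AS
    SELECT CustomerId, Name
    FROM dbo.Customers
    WHERE CustomerId = @CustomerId;
GO

-- A role and a test user with no direct access to the table.
CREATE ROLE SalesReader;
CREATE USER SalesUser WITHOUT LOGIN;
ALTER ROLE SalesReader ADD MEMBER SalesUser;

DENY SELECT, INSERT, UPDATE, DELETE ON dbo.Customers TO SalesReader;
GRANT EXECUTE ON dbo.GetCustomer TO SalesReader;
GO

-- Run the procedure as the restricted user.
EXECUTE AS USER = N'SalesUser';
EXEC dbo.GetCustomer @CustomerId = 1;  -- allowed: only EXECUTE is checked
REVERT;
```

Because the procedure and the table have the same owner, the permission check on `dbo.Customers` is skipped when the access happens through `dbo.GetCustomer`. A direct `SELECT` against the table by the same user would be denied.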

Best Practices

Simply writing stored procedures isn't enough to adequately secure your application. You should also apply the following practices to close potential security holes.

- Grant EXECUTE permissions on the stored procedures for database roles you want to be able to access the data.

- Revoke or deny all permissions to the underlying tables for all roles and users in the database, including the public role. All users inherit permissions from public. Therefore denying permissions to public means that only owners and sysadmin members have access; all other users will be unable to inherit permissions from membership in other roles.

- Do not add users or roles to the sysadmin or db_owner roles. System administrators and database owners can access all database objects.

- Disable the guest account. This will prevent anonymous users from connecting to the database. The guest account is disabled by default in new databases.

- Implement error handling and log errors.

- Create parameterized stored procedures that validate all user input. Treat all user input as untrusted.

- Avoid dynamic SQL unless absolutely necessary. Use the Transact-SQL QUOTENAME() function to delimit a string value and escape any occurrence of the delimiter in the input string.
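
Some of these practices map directly to Transact-SQL statements. The fragment below is a sketch that assumes a user database containing a table named `dbo.Customers`; that name is hypothetical.

```sql
-- Remove table access that every user would otherwise inherit through public.
DENY SELECT, INSERT, UPDATE, DELETE ON dbo.Customers TO public;

-- Disable the guest user in the current user database
-- (this cannot be done in master or tempdb).
REVOKE CONNECT FROM guest;

-- Review who currently belongs to db_owner in this database.
SELECT r.name AS role_name, m.name AS member_name
FROM sys.database_role_members AS drm
JOIN sys.database_principals AS r
    ON r.principal_id = drm.role_principal_id
JOIN sys.database_principals AS m
    ON m.principal_id = drm.member_principal_id
WHERE r.name = N'db_owner';
```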

External Resources

For more information, see the following resources.

| Resource | Description |
|---|---|
| Stored Procedures and SQL Injection in SQL Server Books Online | Topics describe how to create stored procedures and how SQL Injection works. |

See also

- Securing ADO.NET Applications

- Overview of SQL Server Security

- Application Security Scenarios in SQL Server

- Writing Secure Dynamic SQL in SQL Server

- Signing Stored Procedures in SQL Server

- Customizing Permissions with Impersonation in SQL Server

- Modifying Data with Stored Procedures

- ADO.NET Managed Providers and DataSet Developer Center

Writing Secure Dynamic SQL in SQL Server

SQL Injection is the process by which a malicious user enters Transact-SQL statements instead of valid input. If the input is passed directly to the server without being validated and if the application inadvertently executes the injected code, the attack has the potential to damage or destroy data.

Any procedure that constructs SQL statements should be reviewed for injection vulnerabilities because SQL Server will execute all syntactically valid queries that it receives. Even parameterized data can be manipulated by a skilled and determined attacker. If you use dynamic SQL, be sure to parameterize your commands, and never include parameter values directly in the query string.

Anatomy of a SQL Injection Attack

The injection process works by prematurely terminating a text string and appending a new command. Because the inserted command may have additional strings appended to it before it is executed, the malefactor terminates the injected string with a comment mark "--". Subsequent text is ignored at execution time. Multiple commands can be inserted using a semicolon (;) delimiter.
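
The mechanics can be illustrated with a short script that only prints the resulting statement and does not execute it. The table `dbo.OrdersTable`, the column `ShipCity`, and the input value are hypothetical.

```sql
-- Hypothetical input as it might arrive from a form field.
DECLARE @ShipCity nvarchar(200) = N'Redmond''; DROP TABLE dbo.OrdersTable; --';

-- Vulnerable pattern: the input is concatenated into the statement text.
DECLARE @sql nvarchar(max) =
    N'SELECT * FROM dbo.OrdersTable WHERE ShipCity = ''' + @ShipCity + N'''';

-- PRINT only displays the text; nothing is executed here.
PRINT @sql;
-- SELECT * FROM dbo.OrdersTable WHERE ShipCity = 'Redmond'; DROP TABLE dbo.OrdersTable; --'
```

The single quotation mark in the input closes the string literal early, the semicolon starts a second command, and the comment mark hides the trailing quotation mark that the application appended.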

As long as injected SQL code is syntactically correct, tampering cannot be detected programmatically. Therefore, you must validate all user input and carefully review code that executes constructed SQL commands in the server that you are using. Never concatenate user input that is not validated. String concatenation is the primary point of entry for script injection.

Here are some helpful guidelines:

- Never build Transact-SQL statements directly from user input; use stored procedures to validate user input.

- Validate user input by testing type, length, format, and range. Use the Transact-SQL QUOTENAME() function to escape system names or the REPLACE() function to escape any character in a string.

- Implement multiple layers of validation in each tier of your application.

- Test the size and data type of input and enforce appropriate limits. This can help prevent deliberate buffer overruns.

- Test the content of string variables and accept only expected values. Reject entries that contain binary data, escape sequences, and comment characters.

- When you are working with XML documents, validate all data against its schema as it is entered.

- In multi-tiered environments, all data should be validated before admission to the trusted zone.

- Do not accept the following strings in fields from which file names can be constructed: AUX, CLOCK$, COM1 through COM8, CON, CONFIG$, LPT1 through LPT8, NUL, and PRN.

- Use SqlParameter objects with stored procedures and commands to provide type checking and length validation.

- Use regular expressions (the Regex class) in client code to filter invalid characters.
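
On the server side, several of the guidelines above can be combined in one procedure. The sketch below assumes a hypothetical table `dbo.Suppliers` and procedure `dbo.ListSuppliers`. It accepts only expected column names, delimits the column name with QUOTENAME(), and passes the search value as a typed parameter to `sp_executesql` instead of concatenating it.

```sql
-- Hypothetical table used only for this sketch.
CREATE TABLE dbo.Suppliers (
    SupplierId int PRIMARY KEY,
    Name       nvarchar(100) NOT NULL,
    City       nvarchar(50)  NOT NULL
);
GO  -- batch separator used by sqlcmd and SQL Server Management Studio

CREATE PROCEDURE dbo.ListSuppliers
    @City       nvarchar(50),   -- the declared type and length limit the input
    @SortColumn sysname
AS
BEGIN
    SET NOCOUNT ON;

    -- Accept only expected values for the sort column.
    IF @SortColumn NOT IN (N'SupplierId', N'Name', N'City')
    BEGIN
        RAISERROR(N'Unknown sort column.', 16, 1);
        RETURN;
    END;

    -- QUOTENAME() delimits the identifier; the value stays a parameter.
    DECLARE @sql nvarchar(max) =
        N'SELECT SupplierId, Name, City FROM dbo.Suppliers '
      + N'WHERE City = @City ORDER BY ' + QUOTENAME(@SortColumn) + N';';

    EXEC sys.sp_executesql
        @sql,
        N'@City nvarchar(50)',
        @City = @City;
END;
```

Because the procedure runs dynamic SQL, callers also need SELECT permission on the table unless one of the strategies in the next section is applied.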

Dynamic SQL Strategies

Executing dynamically created SQL statements in your procedural code breaks the ownership chain, causing SQL Server to check the permissions of the caller against the objects being accessed by the dynamic SQL.
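
The difference can be observed with two procedures that read the same table, one with a static query and one with dynamic SQL. This is a sketch for a test database; the names `dbo.Invoices`, `dbo.CountInvoicesStatic`, `dbo.CountInvoicesDynamic`, and `LimitedUser` are hypothetical.

```sql
CREATE TABLE dbo.Invoices (InvoiceId int PRIMARY KEY, Amount money NOT NULL);
GO  -- batch separator used by sqlcmd and SQL Server Management Studio

-- Static query: covered by the ownership chain.
CREATE PROCEDURE dbo.CountInvoicesStatic
AS
    SELECT COUNT(*) AS InvoiceCount FROM dbo.Invoices;
GO

-- Dynamic query: permissions are checked against the caller.
CREATE PROCEDURE dbo.CountInvoicesDynamic
AS
    EXEC sys.sp_executesql N'SELECT COUNT(*) AS InvoiceCount FROM dbo.Invoices;';
GO

-- Test user that has EXECUTE on both procedures and nothing on the table.
CREATE USER LimitedUser WITHOUT LOGIN;
GRANT EXECUTE ON dbo.CountInvoicesStatic TO LimitedUser;
GRANT EXECUTE ON dbo.CountInvoicesDynamic TO LimitedUser;
GO

EXECUTE AS USER = N'LimitedUser';
EXEC dbo.CountInvoicesStatic;   -- expected to succeed through the ownership chain
EXEC dbo.CountInvoicesDynamic;  -- expected to fail: no SELECT permission on dbo.Invoices
REVERT;
```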

SQL Server provides the following methods for granting users access to data through stored procedures and user-defined functions that execute dynamic SQL:

- Using impersonation with the Transact-SQL EXECUTE AS clause, as described in Customizing Permissions with Impersonation in SQL Server.

- Signing stored procedures with certificates, as described in Signing Stored Procedures in SQL Server.

EXECUTE AS